The focus of this piece is deliberately on the hardware, but as is common with a new architecture launch, Nvidia has released details of a number of new shading techniques that increase the flexibility of its GPUs and can improve performance when used correctly. We’ll give brief descriptions of these, add a few closing thoughts, and then leave you to get on with your weekend.

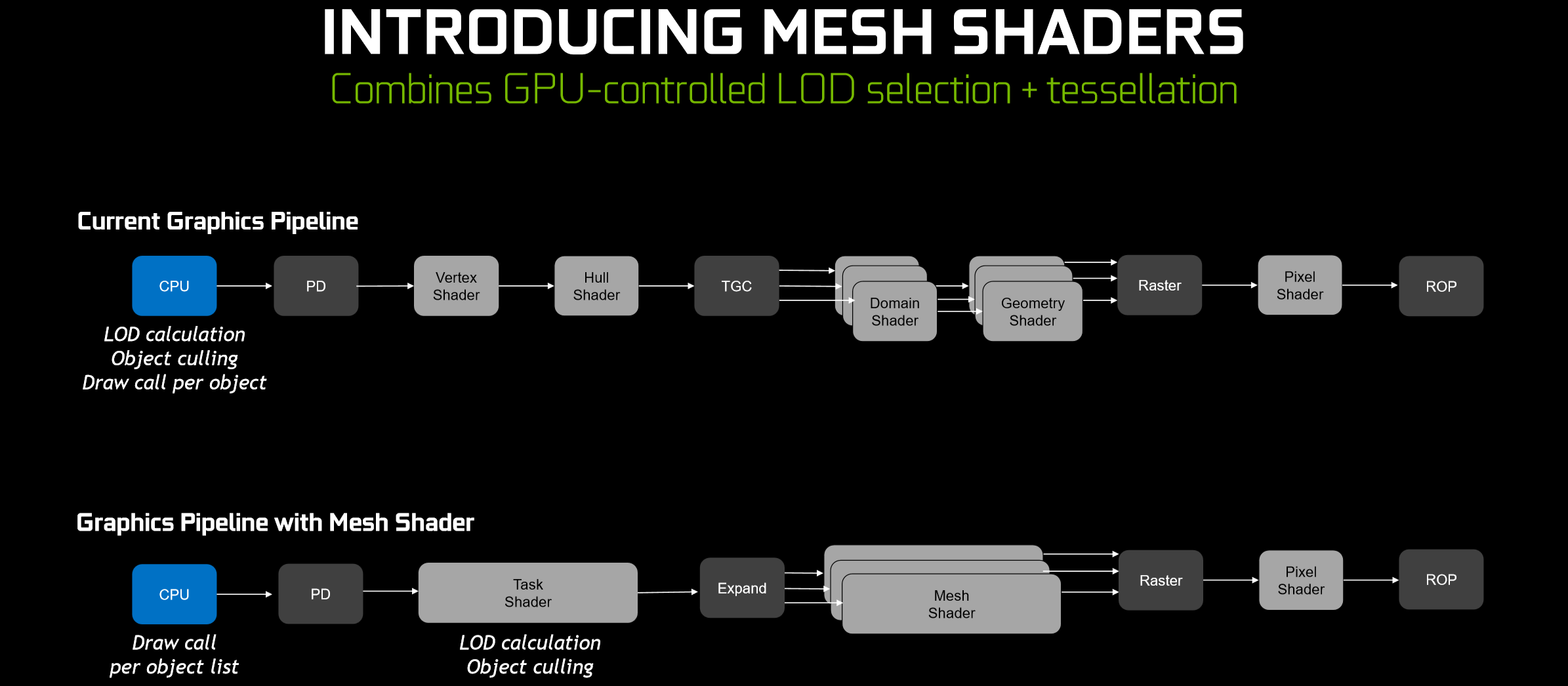

Mesh Shading is a means of leveraging the highly parallel nature of GPUs to significantly increase the number of objects possible in a scene. Instead of being issued individually for each object, draw calls are arranged into lists and submitted to the GPU, which culls unnecessary ones and selects the right level of detail/tessellation based on factors like proximity.
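
To make the idea concrete, here is a minimal CPU-side sketch in Python of what "cull, then pick a level of detail" means when applied to a whole list at once. It is not Nvidia's API or shader code: the `SceneObject` type, the `visible` flag standing in for a real visibility test, and the distance thresholds are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    name: str
    distance: float  # distance from the camera, arbitrary units
    visible: bool    # stand-in for a real frustum/occlusion test (assumed)


# Hypothetical thresholds: (maximum distance, LOD index); LOD 0 is the most detailed.
LOD_THRESHOLDS = [(10.0, 0), (50.0, 1), (200.0, 2)]


def select_lod(distance):
    """Return the LOD index for a distance, or None if the object is too far away."""
    for max_distance, lod in LOD_THRESHOLDS:
        if distance <= max_distance:
            return lod
    return None


def process_object_list(objects):
    """Cull invisible or too-distant objects and pick an LOD for the rest."""
    survivors = []
    for obj in objects:
        if not obj.visible:
            continue  # culled: fails the visibility test
        lod = select_lod(obj.distance)
        if lod is None:
            continue  # culled: beyond the furthest LOD threshold
        survivors.append((obj.name, lod))
    return survivors
```

On a GPU the per-object decisions would run in parallel rather than in a loop, which is where the claimed scaling comes from; the loop here only shows the decision each object goes through.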

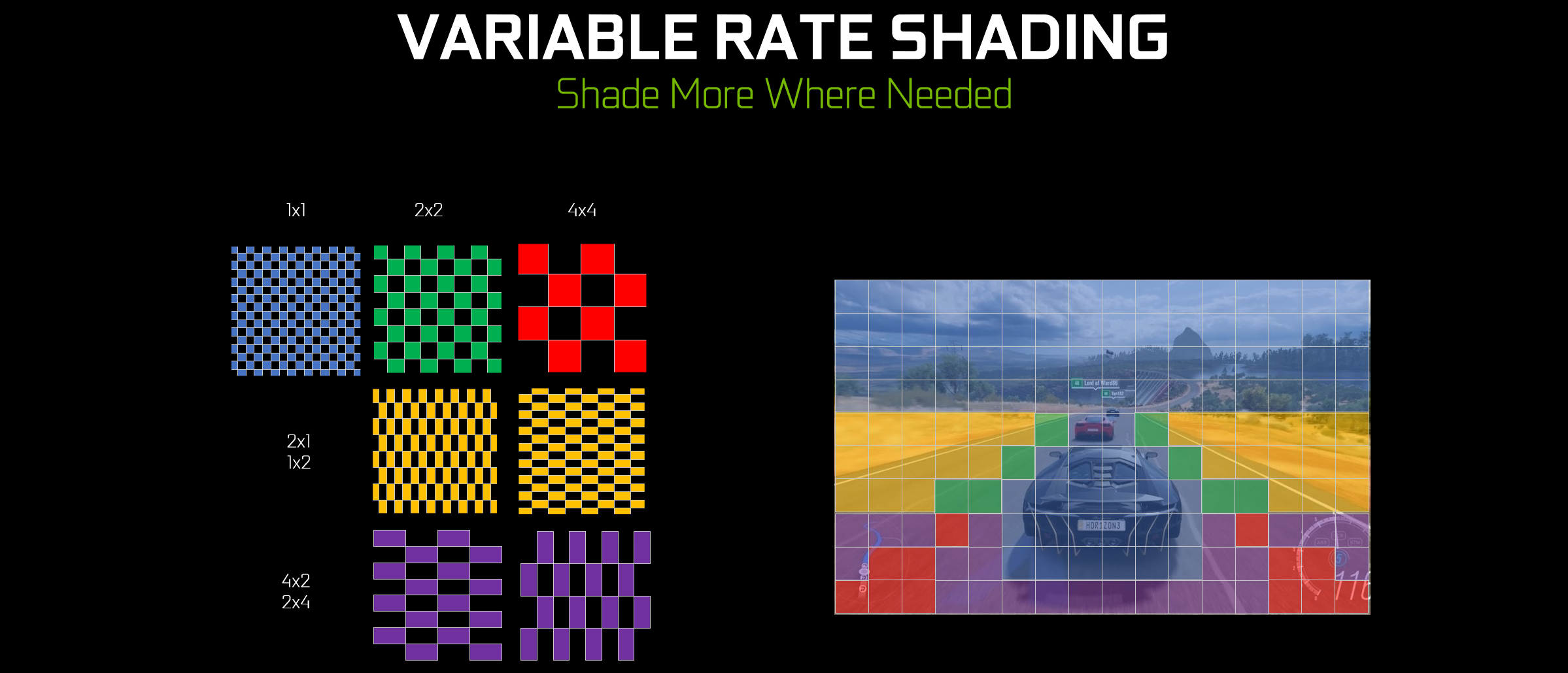

Variable Rate Shading allows developers to alter the rate of shading dynamically on a 16 x 16 pixel basis, the main thinking being that there are often areas of the screen where a full shading rate would bring no benefit and would simply cost performance. All sorts of factors like spatial/temporal colour coherence between pixels (Content Adaptive Shading), motion vectors from temporal anti-aliasing (Motion Adaptive Shading), and viewing angle in VR (Foveated Rendering) can be used to control and alter the shading rate on the fly.
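
As a rough illustration of the content-adaptive case, the Python sketch below builds a per-tile shading-rate map from how much the luminance varies inside each 16 x 16 tile. The variance metric, the thresholds, and the three rates are our own assumptions for the example, not Nvidia's actual heuristic, and the image dimensions are assumed to be multiples of 16.

```python
TILE = 16  # tile size in pixels, matching the 16 x 16 granularity described above


def tile_variance(image, x0, y0):
    """Variance of the luminance values inside one TILE x TILE tile."""
    values = [image[y][x]
              for y in range(y0, y0 + TILE)
              for x in range(x0, x0 + TILE)]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def shading_rate_for(variance, low=0.001, high=0.01):
    """Map a tile's variance to a shading rate (thresholds are hypothetical)."""
    if variance < low:
        return (4, 4)  # flat region: shade once per 4 x 4 pixel block
    if variance < high:
        return (2, 2)  # mild detail: shade once per 2 x 2 pixel block
    return (1, 1)      # detailed region: full rate, one shade per pixel


def build_rate_map(image):
    """Return one shading rate per tile; image is a 2D list of luminance in [0, 1]."""
    height, width = len(image), len(image[0])
    return [[shading_rate_for(tile_variance(image, x0, y0))
             for x0 in range(0, width, TILE)]
            for y0 in range(0, height, TILE)]
```

A flat patch of sky would land in the coarsest bucket, while a high-contrast texture keeps the full rate. Motion-adaptive and foveated variants would swap the variance input for motion vectors or distance from the gaze point.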

Texture Space Shading allows shading values to be computed and stored in a texture as texels. When pixels are texture-mapped, the pre-computed values can be called upon from texture space. One particular area of benefit is VR where one screen has many of the same objects in screen space as the other, so once objects are shaded on one screen they can be easily replicated in the other without the full shader workflow repeating.
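
The reuse described here behaves much like a cache keyed by texel. The Python sketch below is a toy model under that assumption: `TexelShadeCache`, `expensive_shade`, and `render_eye` are hypothetical names, and the "shader" is a stand-in arithmetic function rather than real lighting.

```python
class TexelShadeCache:
    """Stores shaded results per texel so each texel is shaded at most once."""

    def __init__(self, shade_fn):
        self.shade_fn = shade_fn
        self.cache = {}
        self.shade_calls = 0  # counts how often the full shader actually ran

    def sample(self, texel):
        if texel not in self.cache:
            self.cache[texel] = self.shade_fn(texel)
            self.shade_calls += 1
        return self.cache[texel]


def expensive_shade(texel):
    """Placeholder for a costly shader; returns a fake 8-bit value."""
    u, v = texel
    return (u * 31 + v * 17) % 256


def render_eye(cache, visible_texels):
    """Look up the shaded value for every texel this eye can see."""
    return [cache.sample(texel) for texel in visible_texels]
```

If the left eye sees texels A, B, and C and the right eye sees B, C, and D, the shader runs four times rather than six, because B and C are fetched from the texture-space store on the second pass.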

Multi-View Rendering is an extension of Pascal’s Simultaneous Multi-Projection functionality and is about drawing a scene from multiple viewpoints in a single pass. There are now more views supported per pass (four, not two) and views no longer need to share certain attributes, so it’s more flexible. Anything that’s independent of viewpoint is calculated once and shared across views, and then view-dependent attributes are calculated once per view.
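
The split between shared and per-view work can be sketched in a few lines of Python. This is a simplified model, assuming plain translations in place of real model, view, and projection matrices; the function names are ours.

```python
def world_position(vertex, model_offset):
    """View-independent step: identical for every view, so computed once."""
    return tuple(v + o for v, o in zip(vertex, model_offset))


def view_position(world, eye_offset):
    """View-dependent step: repeated once per view."""
    return tuple(w - e for w, e in zip(world, eye_offset))


def render_multi_view(vertices, model_offset, eye_offsets):
    """Return one list of view-space positions per eye/viewpoint."""
    # Shared work happens a single time, regardless of how many views follow.
    shared = [world_position(v, model_offset) for v in vertices]
    # Only the cheap per-view step is repeated for each viewpoint.
    return [[view_position(w, eye) for w in shared] for eye in eye_offsets]
```

With four views, the shared step still runs once per vertex while only the per-view step runs four times, which is the saving the single-pass approach is after.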

Closing Thoughts

Ordinarily, content like this would be included in the review of the cards themselves, but since we’re currently under NDA with regard to performance, we have to let it stand alone for now. We'll cover the specifics of the cards, such as design, cooling, and overclocking, in our full reviews, but wanted this article to be purely about the new architecture.

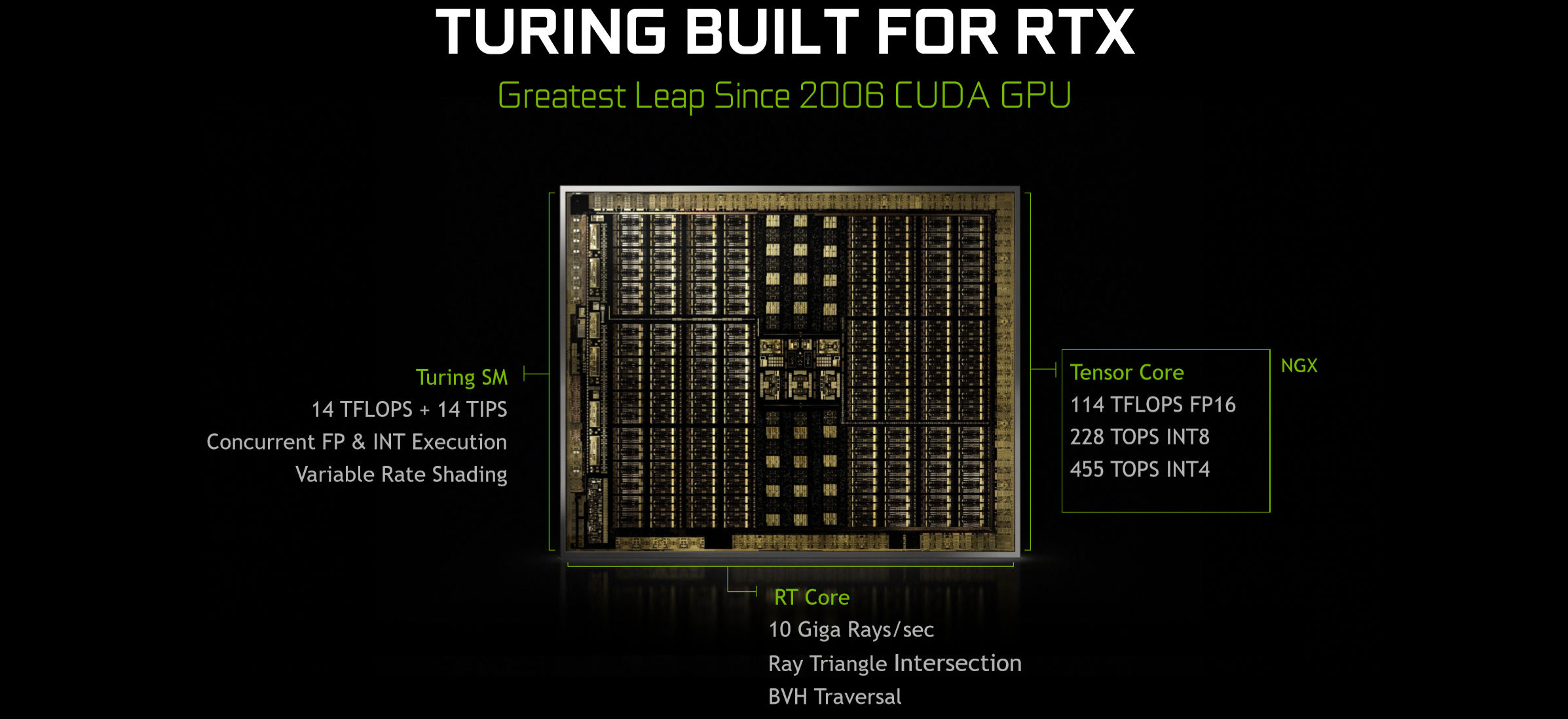

The Turing architecture is clearly an interesting one on many fronts. The INT32 cores, Tensor Cores, and RT Cores added to the SM are all significant hardware changes that either free up regular cores for performance (INT32) or make very interesting things possible with games themselves (Tensor and RT). However, exactly how they will play out in software remains to be seen.

With more cores, similar clock speeds, and faster memory than equivalent (in name) GTX parts, the new RTX cards are clearly going to be fast compared to what we’re used to. But the question of just how fast has just become very workload-dependent indeed. When it comes to existing games, you have to imagine that code heavy on integer math will see the greatest benefit, so quite how that shapes up in frame rates will be interesting to see.

Sadly, existing games look like the only thing we’ll be able to put through the labs, at least for launch. DLSS enabled by the Tensor Cores and ray tracing enabled by RT Cores are undoubtedly cool, sci-fi-sounding things, but where they are concerned Nvidia currently holds all the keys. While we’ve done our best to remain level-headed here, the truth is that our information comes direct from Nvidia: Nvidia controls the messaging. This is nothing new with a launch like this, of course, but what is less common is the inability to put important elements of the samples under the microscope, as based on current lists of games it doesn’t look like DLSS or RTX are going to be testable before launch. Nvidia will thus initially be asking users to lay down considerable money in the hope that both techniques can be delivered effectively. To be honest, we don’t really doubt Nvidia on the technical side of it – DLSS and RTX have been demoed and appear to do what is claimed – but by "effectively" we mean without such an impact on performance as to make the techniques suddenly seem a lot less attractive. This of course involves both game developers and Nvidia, and if releases (and patches) supporting these features only trickle out, the Turing story could be one that takes some time to play out fully. Nonetheless, we’ll do our best to tell it – stay tuned!
